If you are extracting data from Oracle, the Attunity driver helps you extract it faster. But if you are extracting millions of rows from Oracle and it is slower than you anticipated, here are a few tips. These advanced tips can drastically improve performance.

Advanced Tips to Improve Performance

Right-click the Oracle Data Source and click “Show Advanced Editor…”.

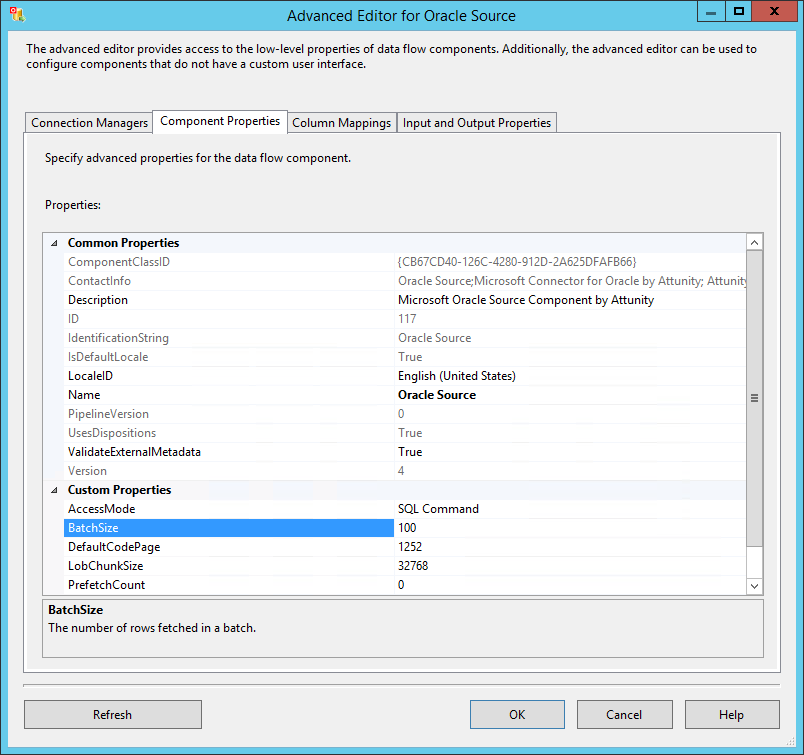

Go to the “Component Properties” tab and, under “Custom Properties”, find “BatchSize”.

The default batch size is 100, which means that as soon as 100 rows are fetched, they are sent to the SSIS pipeline.

If you are using a cloud environment, sending each batch of records has extra overhead. You will not notice the difference with a few thousand rows, but if you are fetching millions of rows, you will notice performance degradation.

Instead of using a batch size of 100, increase the BatchSize value to 10,000 and you should see the performance difference. 10,000 is my magic number and it has worked for me in various situations, but you may have to find your own magic number by experimenting with it.
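
To get a feel for why the batch size matters, here is a rough back-of-the-envelope sketch in Python. It only counts how many batches are needed for a given row count and multiplies that by a fixed per-batch cost. The row count, the 2 ms per-batch overhead, and the function names are all assumptions made up for illustration; they are not measurements from the Attunity driver or SSIS, and real overhead will vary with your network and environment.

```python
def batch_count(total_rows: int, batch_size: int) -> int:
    """Number of batches needed to move total_rows at a given batch size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    # Integer ceiling division: a partial last batch still counts as a batch.
    return (total_rows + batch_size - 1) // batch_size


def estimated_overhead_seconds(total_rows: int, batch_size: int,
                               per_batch_overhead_ms: float) -> float:
    """Total fixed overhead, assuming every batch costs the same extra time."""
    return batch_count(total_rows, batch_size) * per_batch_overhead_ms / 1000.0


if __name__ == "__main__":
    total_rows = 5_000_000        # hypothetical extract size
    per_batch_overhead_ms = 2.0   # hypothetical fixed cost per batch

    for size in (100, 1_000, 10_000, 50_000):
        batches = batch_count(total_rows, size)
        overhead = estimated_overhead_seconds(total_rows, size, per_batch_overhead_ms)
        print(f"BatchSize={size:>6}: {batches:>6} batches, "
              f"about {overhead:.1f} s of per-batch overhead")
```

Under these assumed numbers, moving from 100 to 10,000 cuts the batch count from 50,000 to 500. The model ignores memory use and everything else that happens in the pipeline, so treat it as a reason to experiment with larger values rather than as a way to predict the best one.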
